Setting Up My Own Private Coding Assistant with LLaMA 4

This week I have been playing with setting up my private LLM to help me program. I have heard a lot about the next-gen coding assistants that could/would take our developer jobs, and so I wanted to see if there was anything to it. After doing some research, I realized that running llama4 would require a TON of processing power, aka GPUs, and so I decided to rather run the actual LLM in a cluster on runpods, and simply access it from my local environment. Here are my steps

- Create an account on runpods, and create (deploy) a pod with the at least 96GB VRAM and 4 GPUs. Make sure to expose port 11434 so that we can connect to it later from our local environment.

- SSH into the container, and install ollama and run it.

- Download Llama 4 (took forever to download), and run it.

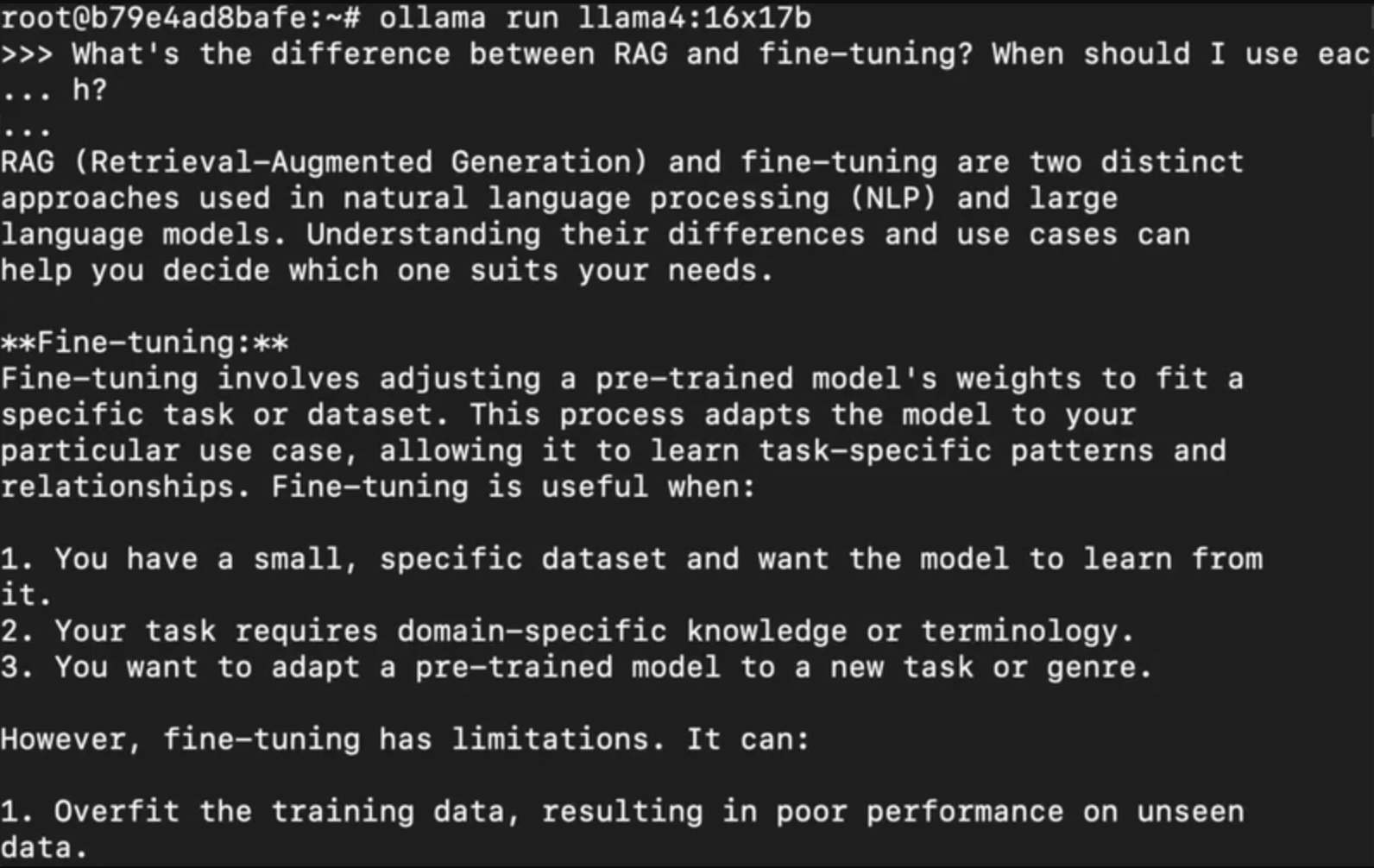

At this point we can already ask it questions through the terminal.

In the next tutorial I am going to walk you through the steps on how to locally spin up a docker container running OpenWebUI.This week, I decided to dive into something I’ve been curious about for a while: setting up my own private LLM to help with coding. With all the buzz around next-gen AI coding assistants—some even claiming they'll replace developers—it felt like the right time to see what’s really possible.

At first, I assumed I could just run one of these models locally on my own machine. How hard could it be, right? That illusion didn’t last long. After digging into some documentation, I realized LLaMA 4 demands an outrageous amount of computing power—specifically, something like 96GB of VRAM and ideally four GPUs. Yeah, my laptop wasn’t going to cut it.

Rather than giving up, I turned to RunPod, a service that lets you rent powerful GPU infrastructure on demand. It seemed like the perfect workaround: I could run the actual model in the cloud and connect to it from my local environment whenever I needed.

Once I created an account on RunPod, I spun up a pod with the right specs. The key was selecting a machine with at least 96GB of VRAM and ensuring that port 11434 was exposed, since that’s how I’d be connecting to the model later. Once the pod was running, I connected to it via SSH and got to work.

Inside the container, I installed Ollama—a neat little tool that makes working with LLMs surprisingly straightforward.

curl -fsSL https://ollama.com/install.sh | shWith Ollama in place, I started downloading LLaMA 4, which honestly took forever. But eventually, the model was ready to run.

When I finally fired it up, I was able to chat with it directly from the terminal. No fancy UI, just raw command-line interaction with a seriously capable language model. And honestly? It was kind of amazing. I could ask it questions, get code suggestions, and brainstorm ideas—all without needing a public API key or sending my code snippets to some external service.

Of course, using the terminal isn’t exactly the most pleasant experience long-term. So in the next post, I’ll show you how I set up OpenWebUI in a local Docker container to give this model a proper user interface. It’s way more fun to use and makes the whole thing feel like a polished, personal coding assistant.

For now, though, I’m already impressed by what’s possible with just a few tools and a little rented compute power. The AI hype may be real—but it turns out, it’s also something you can play with yourself.

Comments ()